Santa Claus’ Cloud-Powered Christmas: Optimizing Deliveries with Azure Multi-Hub Deployments 🎅🎄🎁

This post is part of the Festive Tech Calendar 2024 – organized by the CloudFamily. Find all speakers and sessions on the Festive Tech Calendar website.

This year we are raising money for the Beatson Cancer Charity and appreciate any contribution. Go to the Just Giving Page to find out more. Thanks!

As the holiday season approaches, Santa Claus faces the challenge of delivering millions of gifts worldwide in a single night.

To achieve this feat efficiently, Santa’s North Pole IT team has turned to modern cloud infrastructure, specifically an Azure Multi-Hub Deployment, to optimize his global delivery network. But our beloved Santa is not the only one facing this challenge…

The Challenge

Azure provides the ability to deploy workloads in multiple regions. This is essential not only to be closer to end customers but also to guarantee resilience. What if an Azure region goes down? How long can we afford such downtime before it seriously impacts our business?

If customers or business locations are spread across the globe, it is crucial to ensure that necessary applications and services are deployed close to these customers (Remember: Santa has a global clientele, who would be sad if the gifts arrived too late). This improves both performance and user experience.

But how do we achieve this in Azure?

Hub-and-Spoke in a single region

A common approach is the hub-and-spoke architecture, which is well-understood. A central hub network contains key components for communication and securing workloads through a central firewall. Each workload (spoke) is connected only to the hub, ensuring clear separation of workloads, as well as centralized monitoring and security.

An alternative, full-mesh networking, where every workload is connected to every other workload, is generally discouraged as it leads to a complex and hard-to-manage network structure.

So far, so good. Let’s scale.

Hub-and-Spoke across multiple regions

What if we operate in multiple regions? It makes sense to retain the hub-and-spoke model in these regions, with each region having its own hub network connected to its respective spoke networks. But how do we connect the hubs across regions, ensuring communication between workloads in different regions?

Enter Full-Mesh networking – but for the right purpose

While we avoid the full-mesh model within a single region, it is beneficial to connect all hub networks across regions. This enables fast communication between workloads in different regions. However, we still avoid direct connectivity between all workloads. We aim to maintain workload segregation and centralized monitoring.

In short: Use a full-mesh model for hub networks and a hub-and-spoke model for spoke networks.

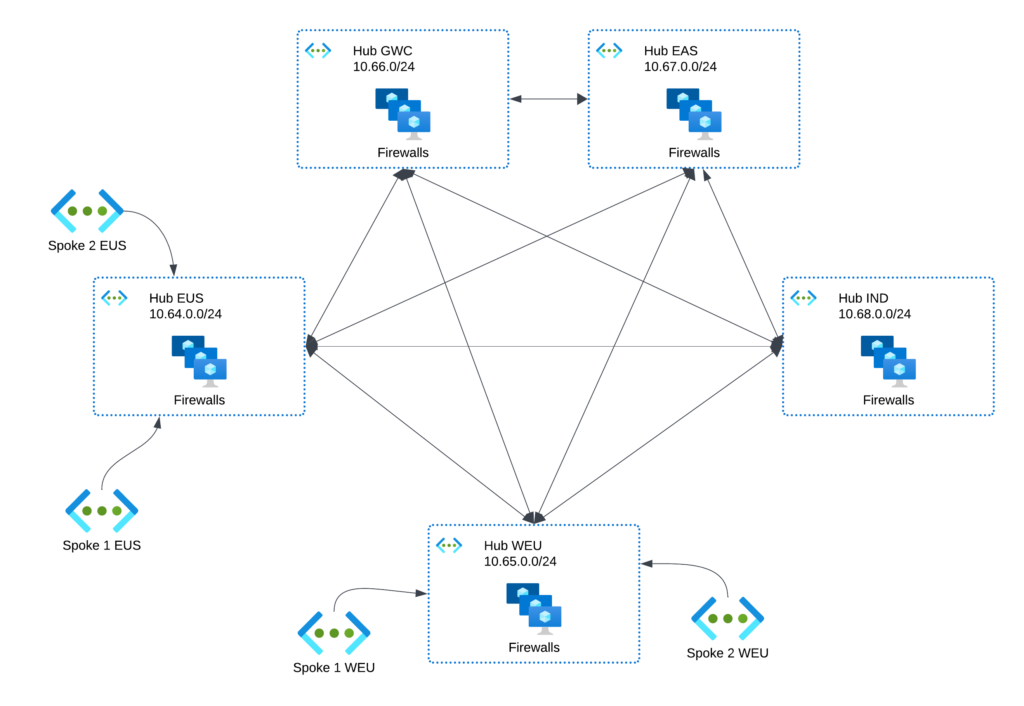

Let’s have a look at a fully deployed Multi-Hub environment (Simplified illustration):

You can see, that each Hub is peered with each other hub, but spokes are only peered to the regional hub and also never to other spokes.

Key Considerations

VNET Address Planning

Why is this important?

Proactive address planning is foundational for achieving our scenario. We need to avoid address conflicts between VNETs to ensure seamless communication and also need contiguous ranges. I’ll explain why.

How do we proceed?

- Define a sufficiently large address space for the entire Azure environment, including all regions.

- Allocate a unique address range for each region.

- Subdivide each region’s address range for hubs and spokes.

Avoid overlaps and ensure contiguous address ranges within each region to simplify routing table configurations.

Example IP Address Planning

| Description | Address range |
|---|---|
| Azure Environment | 10.64.0.0/13 |
| 🌎 Azure Region East US | 10.64.0.0/16 |
| – Hub Network EUS | 10.64.0.0/24 |
| – Spoke Networks EUS | 10.64.<1-255>.0/24 |
| 🌍 Azure Region West Europe | 10.65.0.0/16 |
| – Hub Network WEU | 10.65.0.0/24 |
| – Spoke Networks WEU | 10.65.<1-255>.0/24 |
| 🌍 Azure Region Germany West Central | 10.66.0.0/16 |
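A plan like this can be sanity-checked with Python’s standard `ipaddress` module. The following is a minimal sketch, assuming the same scheme as the table: one /16 per region, with the hub in the first /24 and the spokes in the remaining /24s. `check_plan` and `hub_and_spokes` are hypothetical helper names used only for illustration.

```python
import ipaddress

# Address plan from the table above.
ENVIRONMENT = ipaddress.ip_network("10.64.0.0/13")
REGIONS = {
    "East US": ipaddress.ip_network("10.64.0.0/16"),
    "West Europe": ipaddress.ip_network("10.65.0.0/16"),
    "Germany West Central": ipaddress.ip_network("10.66.0.0/16"),
}


def check_plan(environment, regions):
    """Return a list of problems: ranges outside the environment or overlapping each other."""
    problems = []
    items = list(regions.items())
    for name, net in items:
        if not net.subnet_of(environment):
            problems.append(f"{name} {net} is outside {environment}")
    for i, (name_a, net_a) in enumerate(items):
        for name_b, net_b in items[i + 1:]:
            if net_a.overlaps(net_b):
                problems.append(f"{name_a} {net_a} overlaps {name_b} {net_b}")
    return problems


def hub_and_spokes(region_net):
    """Split a regional /16 into /24s: the first one is the hub, the rest are spokes."""
    blocks = list(region_net.subnets(new_prefix=24))
    return blocks[0], blocks[1:]


hub, spokes = hub_and_spokes(REGIONS["West Europe"])
print(check_plan(ENVIRONMENT, REGIONS))               # []
print(hub, spokes[0], spokes[-1], len(spokes))        # 10.65.0.0/24 10.65.1.0/24 10.65.255.0/24 255
print(len(list(ENVIRONMENT.subnets(new_prefix=16))))  # 8 regional /16s fit into the /13
```

The same `subnet_of` check also catches a spoke that ends up in the wrong region. For example, a WEU spoke planned as 10.64.1.0/24 is not inside 10.65.0.0/16.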

VNET Peering

Within a single region, spokes connect only to the hub, avoiding direct peering between spokes.

Across multiple regions, all hubs are peered with one another. This ensures traffic from a spoke in one region to a spoke in another region always flows through the respective hubs, avoiding intermediate hubs.
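The peering rules can be expressed as a small topology model. This minimal Python sketch builds a full mesh between hubs (n(n−1)/2 links for n hubs), peers each spoke only with its regional hub, and checks that every hop on a cross-region spoke-to-spoke path is a direct peering. The region keys, VNET names and helper functions are hypothetical. Each link is counted once here, although Azure configures a peering on both VNETs of a pair.

```python
from itertools import combinations

# Hypothetical topology: three regions with two spokes each (names are illustrative).
REGIONS = {
    "eus": ["spoke-eus-1", "spoke-eus-2"],
    "weu": ["spoke-weu-1", "spoke-weu-2"],
    "gwc": ["spoke-gwc-1", "spoke-gwc-2"],
}


def hub(region):
    return f"hub-{region}"


def build_peerings(regions):
    """Full mesh between hubs; each spoke is peered only with its regional hub."""
    links = {frozenset(pair) for pair in combinations([hub(r) for r in regions], 2)}
    for region, spokes in regions.items():
        links |= {frozenset((hub(region), spoke)) for spoke in spokes}
    return links


def spoke_to_spoke_path(src, src_region, dst, dst_region):
    """Traffic leaves via the source hub and enters via the destination hub, never a third hub."""
    hubs = [hub(src_region)] if src_region == dst_region else [hub(src_region), hub(dst_region)]
    return [src, *hubs, dst]


peerings = build_peerings(REGIONS)
path = spoke_to_spoke_path("spoke-eus-1", "eus", "spoke-weu-2", "weu")
print(path)  # ['spoke-eus-1', 'hub-eus', 'hub-weu', 'spoke-weu-2']
print(all(frozenset(hop) in peerings for hop in zip(path, path[1:])))  # True
print(frozenset(("spoke-eus-1", "spoke-eus-2")) in peerings)  # False
print(len(peerings))  # 9: 3 hub-to-hub links + 6 hub-to-spoke links
```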

Hub Components

Azure VNET peering is non-transitive: two VNETs can communicate directly only if they are peered with each other.

Take, for example, these VNET peerings:

VNET A can communicate with VNET B, and VNET B with VNET C, but VNET A cannot directly communicate with VNET C unless there’s a routing component in VNET B.

When traffic flows from a spoke in one region to a spoke in another, it must pass through both regional hubs. Therefore, hubs must have the necessary components to route traffic between them.

Recommended Components:

- VPN or ExpressRoute Gateway

- Azure Firewall

- Third-party virtual appliances (e.g., Check Point, Palo Alto, Fortinet)

- VM acting as a router (e.g., check out the AzureVM-Router by Daniel Mauser)

Best Practice: Use Azure Firewall or third-party virtual appliances for centralized monitoring and security.

What about other Components in Hubs?

Avoid adding other components to hubs. Deploy services like DNS, domain controllers, or even Zscaler Private Access Connectors (whatever you need) in dedicated spokes to simplify routing.

Routing

Routing is a critical part of this scenario. We need to ensure that traffic from a spoke in one region to a spoke in another region passes through the hubs in the respective regions. This requires appropriate configuration of the routing tables in the VNETs. Why is this configuration necessary? I’ll answer that in the next section.

What routes does a VNET know?

An Azure VNET typically knows the following routes:

- Local routes: All address ranges defined within this VNET.

- Routes to peered VNETs: All address ranges defined in directly peered VNETs.

- Routes learned from a gateway (VPN or ExpressRoute).

- Default route: 0.0.0.0/0 -> Internet.

- Some private network ranges with next hop None (we will ignore these here because they are not relevant to our scenario).

The key here is point 2: a VNET only knows the address ranges of directly peered VNETs. This means a VNET does not know that it could communicate with a third VNET via another VNET.
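This rule can be modeled in a few lines. The minimal Python sketch below derives the simplified default routes of a VNET from its direct peerings, using the example VNETs from this post. The data structures and function names are hypothetical. The hop labels mimic the tables in this post. Gateway routes and the private ranges with next hop None are left out.

```python
# Example VNETs: name -> (region, address space).
VNETS = {
    "hub-eus": ("East US", "10.64.0.0/24"),
    "spoke-eus": ("East US", "10.64.1.0/24"),
    "hub-weu": ("West Europe", "10.65.0.0/24"),
    "spoke-weu": ("West Europe", "10.65.1.0/24"),
}
PEERINGS = [("spoke-eus", "hub-eus"), ("hub-eus", "hub-weu"), ("spoke-weu", "hub-weu")]


def peers_of(vnet):
    """Only VNETs with a direct peering count; peering is not transitive."""
    return sorted(b if a == vnet else a for a, b in PEERINGS if vnet in (a, b))


def system_routes(vnet):
    """Simplified default routes: local range, directly peered ranges, 0.0.0.0/0."""
    region, local = VNETS[vnet]
    routes = [(local, "Virtual Network")]
    for peer in peers_of(vnet):
        peer_region, prefix = VNETS[peer]
        hop = "VNET Peering" if peer_region == region else "Global VNET Peering"
        routes.append((prefix, hop))
    routes.append(("0.0.0.0/0", "Internet"))
    return routes


for prefix, hop in system_routes("spoke-eus"):
    print(prefix, hop)
# 10.64.1.0/24 Virtual Network
# 10.64.0.0/24 VNET Peering
# 0.0.0.0/0 Internet
```

Spoke WEU’s 10.65.1.0/24 never shows up for Spoke EUS, because the only path to it runs through two other VNETs.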

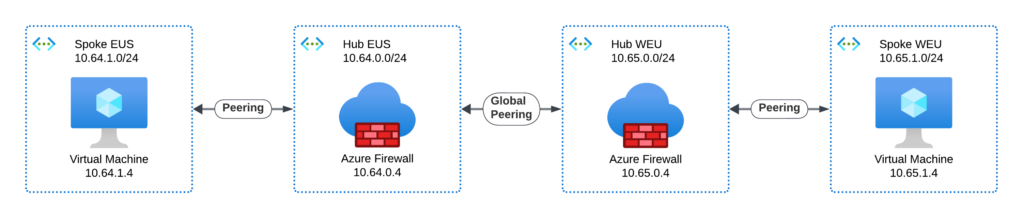

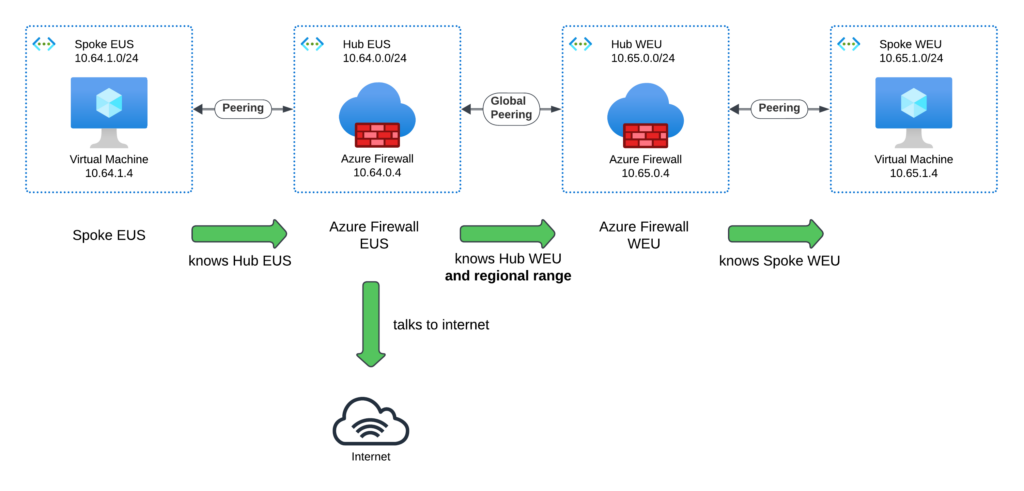

To keep it easy to understand, our example shows only two regional hubs, East US and West Europe, with one spoke per region.

In this example, the Routing Table for Spoke EUS looks like this:

| Address prefixes | Next hop type | IP |
|---|---|---|
| 10.64.1.0/24 | Virtual Network | – |
| 10.64.0.0/24 | VNET Peering | – |
| 0.0.0.0/0 | Internet | – |

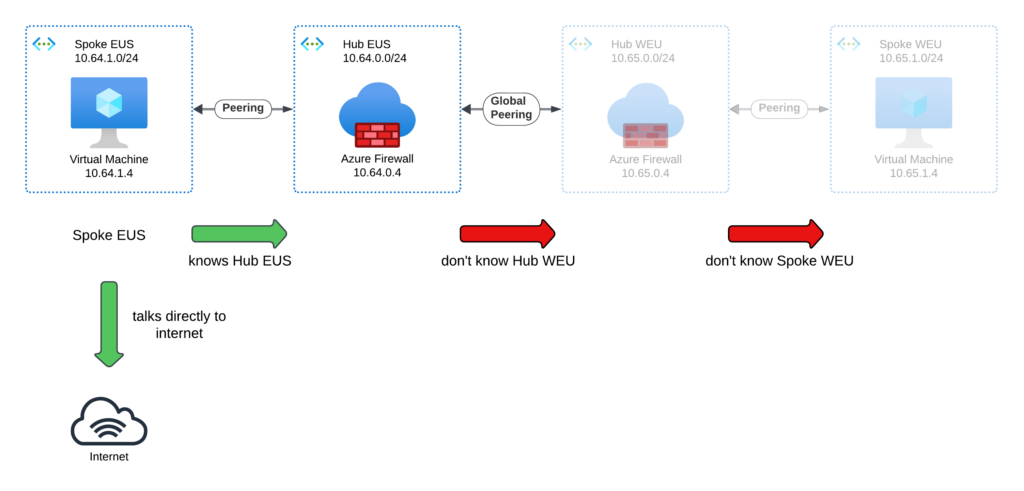

Following what we know: since Spoke EUS is peered only with Hub EUS, it knows only the address range of Hub EUS (10.64.0.0/24). It does not know the address ranges of Hub WEU, because they are not directly peered. Thus, Spoke EUS cannot send traffic to Spoke WEU or Hub WEU. Additionally, Spoke EUS can communicate directly with the internet, which we want to avoid; this is why we have firewalls in the hubs.
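The gap can be made concrete with a longest-prefix-match lookup over the Spoke EUS routes from the table above. This is a minimal Python sketch of the idea, not Azure’s implementation. Like this post, it leaves out the private ranges with next hop None. The destination 10.65.1.10 is a hypothetical VM in Spoke WEU.

```python
import ipaddress

# Spoke EUS routes before any route table is attached (simplified, as in the table above).
SPOKE_EUS_ROUTES = [
    ("10.64.1.0/24", "Virtual Network"),
    ("10.64.0.0/24", "VNET Peering"),
    ("0.0.0.0/0", "Internet"),
]


def lookup(routes, destination):
    """Return the (prefix, next hop type) pair whose prefix is the longest match."""
    dest = ipaddress.ip_address(destination)
    matches = [route for route in routes if dest in ipaddress.ip_network(route[0])]
    return max(matches, key=lambda route: ipaddress.ip_network(route[0]).prefixlen)


print(lookup(SPOKE_EUS_ROUTES, "10.64.0.4"))   # ('10.64.0.0/24', 'VNET Peering')
print(lookup(SPOKE_EUS_ROUTES, "10.65.1.10"))  # ('0.0.0.0/0', 'Internet')
```

Only the default route matches the Spoke WEU address, so nothing steers that packet towards Hub EUS or Hub WEU.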

Direct the traffic to the regional hub

How do we solve this problem?

Each spoke in a region gets a routing table that directs traffic to the regional hub. This is straightforward. In the routing table, we override the default route:

- Address prefix: 0.0.0.0/0

- Next hop type: Virtual Appliance

- IP: Hub EUS Firewall IP (10.64.0.4 in this case)

From now on, any traffic from a spoke that is not destined for a system within the spoke itself is routed through the hub.

| Address prefixes | Next hop type | IP |
|---|---|---|
| 10.64.1.0/24 | Virtual Network | – |
| 10.64.0.0/24 | VNET Peering | – |
| 0.0.0.0/0 | Virtual Appliance | 10.64.0.4 |

Observant readers may notice that the spoke network still has a valid and functional route to the hub’s entire address range because of the peering. This route is more specific than the default route.

Is this a problem? Only if additional components have been deployed in the hubs (which we aim to avoid). These additional components can be directly reached by a spoke system without passing through the firewall, as the spoke knows the route. To prevent this, we would also need to override the hub’s address range in the spoke’s routing table. This is possible but not ideal.
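Both effects can be seen in a minimal Python sketch of route selection for Spoke EUS after the route table is attached. The longest prefix match wins first. For identical prefixes, the user-defined route beats the system route. Azure also ranks BGP routes between the two, which this sketch leaves out. The destinations 10.65.1.10 and 10.64.0.20 are hypothetical VMs in Spoke WEU and Hub EUS. As in this post, the private ranges with next hop None are omitted.

```python
import ipaddress

# Tie-breaker for identical prefixes: user-defined routes win over system ("Default") routes.
PRIORITY = {"Default": 0, "User": 1}

# Spoke EUS after attaching a route table with 0.0.0.0/0 -> Hub EUS firewall (10.64.0.4).
SPOKE_EUS_ROUTES = [
    ("Default", "10.64.1.0/24", "Virtual Network", None),
    ("Default", "10.64.0.0/24", "VNET Peering", None),
    ("Default", "0.0.0.0/0", "Internet", None),
    ("User", "0.0.0.0/0", "Virtual Appliance", "10.64.0.4"),
]


def lookup(routes, destination):
    """Longest prefix match first, then route source priority; returns (next hop type, IP)."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r[1])]
    best = max(candidates, key=lambda r: (ipaddress.ip_network(r[1]).prefixlen, PRIORITY[r[0]]))
    return best[2], best[3]


print(lookup(SPOKE_EUS_ROUTES, "10.65.1.10"))  # ('Virtual Appliance', '10.64.0.4')
print(lookup(SPOKE_EUS_ROUTES, "8.8.8.8"))     # ('Virtual Appliance', '10.64.0.4')
# The peering /24 is more specific than the /0 UDR, so hub traffic bypasses the firewall:
print(lookup(SPOKE_EUS_ROUTES, "10.64.0.20"))  # ('VNET Peering', None)

# Overriding the hub range too (possible, but not ideal) forces hub traffic through the firewall.
HUB_OVERRIDE = ("User", "10.64.0.0/24", "Virtual Appliance", "10.64.0.4")
print(lookup(SPOKE_EUS_ROUTES + [HUB_OVERRIDE], "10.64.0.20"))  # ('Virtual Appliance', '10.64.0.4')
```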

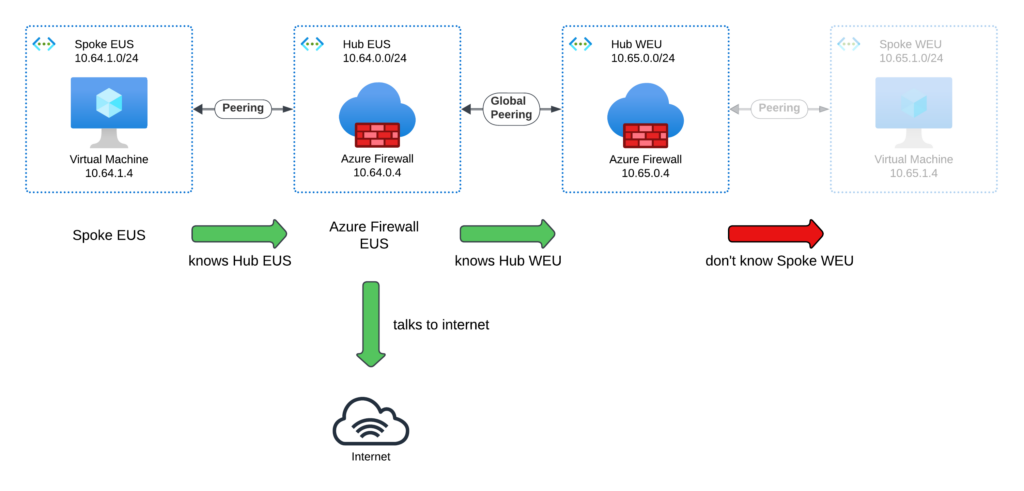

Great, we can now communicate between Spoke EUS and the Hub EUS firewall. Internet traffic also flows through our firewall.

But how do we reach the Spoke WEU?

Hub EUS knows the following routes:

- Local routes: The address range of Hub EUS.

- Routes to peered VNETs: The address ranges of Spoke EUS and Hub WEU.

- Routes learned from a gateway (VPN or ExpressRoute).

- Default route: 0.0.0.0/0 -> Internet.

| Address prefixes | Next hop type | IP |
|---|---|---|
| 10.64.0.0/24 | Virtual Network | – |
| 10.64.1.0/24 | VNET Peering | – |
| 10.65.0.0/24 | Global VNET Peering | – |
| 0.0.0.0/0 | Internet | – |

What’s missing? The route to the address range of Spoke WEU (10.65.1.0/24).

Regional routes

Do we simply add this route to the hub?

It’s possible, but do we want to add a route for every new spoke in any region, in every hub? Even with Infrastructure as Code, this would create a significant workload, not to mention the potential for confusion and errors.

Fortunately, our careful IP address planning pays off here.

We already know the entire address range of the other regions. We can add a route for the entire address range of each region, which requires adding just one route per region.

In the routing table for Hub EUS, we add:

- Address prefix: 10.65.0.0/16

- Next hop type: Virtual Appliance

- IP: Hub WEU Firewall IP (10.65.0.4 in this case)

We don’t need to specify which spoke network in WEU we want to reach because we know Hub WEU will route the traffic correctly—it is peered with the spokes in its region.

| Address prefixes | Next hop type | IP |
|---|---|---|
| 10.64.0.0/24 | Virtual Network | – |
| 10.64.1.0/24 | VNET Peering | – |
| 10.65.0.0/24 | Global VNET Peering | – |
| 0.0.0.0/0 | Internet | – |
| 10.65.0.0/16 | Virtual Appliance | 10.65.0.4 |
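A longest-prefix-match sketch over the Hub EUS table above shows the effect of the summary route. Here, too, identical prefixes are broken in favour of user-defined routes. This is a simplified Python model, not Azure’s implementation. The destinations 10.65.1.10 (Spoke WEU) and 10.65.42.7 (a spoke that does not exist yet) are hypothetical.

```python
import ipaddress

# Tie-breaker for identical prefixes: user-defined routes win over system ("Default") routes.
PRIORITY = {"Default": 0, "User": 1}

# Hub EUS routes from the table above, including the regional summary route for WEU.
HUB_EUS_ROUTES = [
    ("Default", "10.64.0.0/24", "Virtual Network", None),
    ("Default", "10.64.1.0/24", "VNET Peering", None),
    ("Default", "10.65.0.0/24", "Global VNET Peering", None),
    ("Default", "0.0.0.0/0", "Internet", None),
    ("User", "10.65.0.0/16", "Virtual Appliance", "10.65.0.4"),
]


def lookup(routes, destination):
    """Longest prefix match first, then route source priority; returns (next hop type, IP)."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r[1])]
    best = max(candidates, key=lambda r: (ipaddress.ip_network(r[1]).prefixlen, PRIORITY[r[0]]))
    return best[2], best[3]


print(lookup(HUB_EUS_ROUTES, "10.65.1.10"))  # ('Virtual Appliance', '10.65.0.4')
print(lookup(HUB_EUS_ROUTES, "10.65.42.7"))  # ('Virtual Appliance', '10.65.0.4'), no new route needed
print(lookup(HUB_EUS_ROUTES, "10.65.0.4"))   # ('Global VNET Peering', None)
```

The WEU firewall itself stays reachable over the more specific global peering route. Every other WEU address, including future spokes, falls under the single /16 route.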

Rinse and repeat

We apply this approach to all hubs in all regions. For each hub, we add a route for the entire address range of the other regions. Additionally, for each spoke, we add a route to the regional hub.
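Because the rule is uniform, the route entries can be generated from the region plan, for example as input for an Infrastructure as Code template. The minimal Python sketch below assumes a hypothetical plan. The EUS and WEU values come from this post, while the Germany West Central firewall IP (10.66.0.4) is an assumption. `hub_routes` and `spoke_routes` are illustrative names, not part of any Azure tooling.

```python
import ipaddress

# Hypothetical region plan: regional address range and hub firewall IP per region.
# EUS and WEU are taken from the example; the GWC firewall IP is an assumption.
REGIONS = {
    "EUS": ("10.64.0.0/16", "10.64.0.4"),
    "WEU": ("10.65.0.0/16", "10.65.0.4"),
    "GWC": ("10.66.0.0/16", "10.66.0.4"),
}


def hub_routes(region):
    """One summary route per other region, pointing at that region's hub firewall."""
    return [
        (prefix, "Virtual Appliance", firewall)
        for other, (prefix, firewall) in REGIONS.items()
        if other != region
    ]


def spoke_routes(region):
    """Each spoke sends its default route to the firewall in its own regional hub."""
    _, firewall = REGIONS[region]
    return [("0.0.0.0/0", "Virtual Appliance", firewall)]


def firewall_inside_region(region):
    """Sanity check: the firewall IP should lie inside the region's address range."""
    prefix, firewall = REGIONS[region]
    return ipaddress.ip_address(firewall) in ipaddress.ip_network(prefix)


for region in REGIONS:
    assert firewall_inside_region(region), region
    print(region, "hub:", hub_routes(region))
    print(region, "spokes:", spoke_routes(region))
```

With R regions, each hub table gets R − 1 entries and each spoke table gets one, no matter how many spokes exist.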

Summary

With careful planning of IP address ranges and routing tables, we can implement a multi-hub scenario in Azure. This ensures that workloads in different regions can communicate while traffic passes through the required security components.

…and for Santa’s IT team?

Santa’s IT team can in fact implement a nice multi-hub scenario in Azure. This setup allows Santa’s regional operations, from toy workshops to delivery hubs, to communicate seamlessly across the globe. At the same time, all traffic passes through the North Pole’s advanced security systems, ensuring that the Naughty/Nice lists and other critical data remain protected.

I hope this approach was useful to you.

🎄 Happy Holidays Y’all

Great article with lots of practical tips for implementation! Many thanks Christoph – we look forward to more!